Intro What Is MCP

Based on my understanding, I will try to explain in a simple way what MCP (Model Context Protocol) is.

The I/O

The Input/Output (I/O) model is the one rule that always holds in computing: every action is an input and an output.

WebAPI

Take calling a web API, for example a weather API:

Input: the location to query.

Output: a JSON response with the weather at that location.

SQL

Another example is working with SQL:

You always input a SQL query such as SELECT * FROM SOMETABLE, and the SQL client returns the result table.

Input: a SQL query

Output: a result table
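The same I/O shape can be seen with Python's built-in sqlite3 module. This is a minimal sketch using a throwaway in-memory database; the table name sometable and its rows are made up for illustration.

```python
import sqlite3


def run_query(conn: sqlite3.Connection, query: str) -> list:
    """Input: a SQL query string. Output: the rows the database returns."""
    return conn.execute(query).fetchall()


# Throwaway in-memory database; table and rows are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sometable (city TEXT, temperature_c INTEGER)")
conn.executemany(
    "INSERT INTO sometable VALUES (?, ?)",
    [("HongKong", 26), ("Tokyo", 22)],
)

rows = run_query(conn, "SELECT * FROM sometable")
print(rows)
```

Whatever the query is, the pattern stays the same: a string goes in, a table of rows comes out.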

Every action in a computer follows this I/O model.

The LLM era

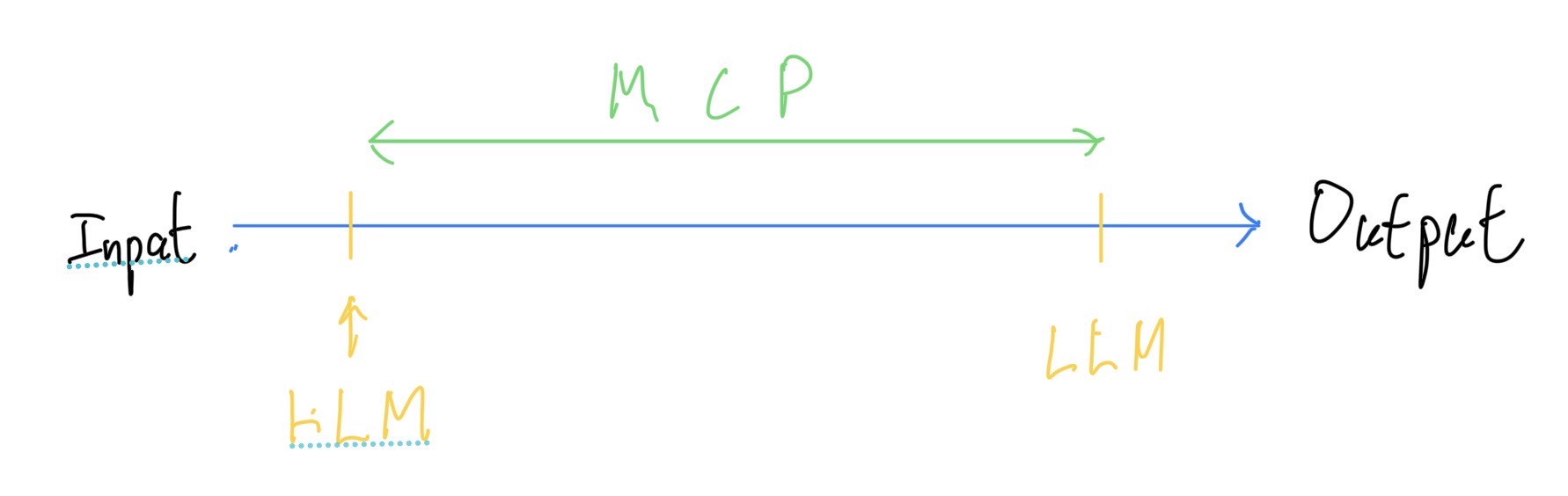

Now, we are in LLM era. What if…What if we inject LLM in to the I/O process like this:

You may notice that it works like middleware: the LLM sits after the input and before the output. I think this is the simplest way to explain what MCP does.
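The middleware idea can be sketched in a few lines of Python. This is a hypothetical sketch, not how MCP is implemented: understand_input and explain_output are hard-coded stand-ins for the two jobs an LLM would do, and weather_api is a fake API that returns sample JSON. The point is only the shape: the original I/O call stays unchanged, and the "LLM" wraps it on both sides.

```python
import json


def understand_input(user_text: str) -> dict:
    """Stand-in for the LLM on the input side: natural language -> API parameters."""
    # A real LLM would infer this; here we only recognise one hard-coded city.
    return {"location": "HongKong"} if "hong kong" in user_text.lower() else {}


def explain_output(raw_output: str) -> str:
    """Stand-in for the LLM on the output side: raw JSON -> a readable sentence."""
    data = json.loads(raw_output)
    return f"{data['location']} temperature is now {data['temperature']}."


def weather_api(location: str) -> str:
    """Fake weather API that returns raw JSON (sample values, not real data)."""
    return json.dumps({"location": location, "temperature": "26C"})


def with_llm(api, parse_input, format_output):
    """Wrap a plain I/O function so an 'LLM' step runs before and after it."""
    def wrapped(user_text: str) -> str:
        params = parse_input(user_text)   # LLM after the input
        raw = api(**params)               # the original I/O call, unchanged
        return format_output(raw)         # LLM before the output
    return wrapped


ask = with_llm(weather_api, understand_input, explain_output)
print(ask("I want to know Hong Kong weather now."))
# HongKong temperature is now 26C.
```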

Let's go back to the API example to see what changes.

WebAPI

Before, you had to follow the API documentation and input exactly what the API expects. For example, you had to input exactly location=HongKong and pass it in the API URL, like http://someapiurl?location=HongKong. That was the only way to call the weather API.

But now, because you have injected the LLM, you can input natural human language:

Input: I want to know Hong Kong weather now.

The LLM then understands your input, extracts the parameter "Hong Kong", and fills it into the API call.
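Here is a minimal sketch of what happens once the LLM has done its part. It assumes the LLM hands back the extracted parameter as a small dictionary of arguments, already in the format the API expects; the tool_arguments value is written by hand here, build_weather_url is a hypothetical helper, and the base URL is the placeholder from the example above.

```python
from urllib.parse import urlencode

# Hypothetical arguments, as if produced by the LLM from
# "I want to know Hong Kong weather now." A real model decides these itself.
tool_arguments = {"location": "HongKong"}


def build_weather_url(base_url: str, arguments: dict) -> str:
    """Fill the extracted parameters into the query string of the API call."""
    return f"{base_url}?{urlencode(arguments)}"


url = build_weather_url("http://someapiurl", tool_arguments)
print(url)  # http://someapiurl?location=HongKong
```

The user never types location=HongKong; the structured arguments come from the LLM, and ordinary code turns them into the exact request the API wants.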

Now for the output. From the weather API response, we may get something like:

```json
{"location": "HongKong", "temperature": "26C"}
```

Because we also injected the LLM on the output side, instead of this boring raw JSON, the LLM reads the output (in this case, JSON text) and turns it into natural human language:

Output: HongKong temperature is now 26C.

The MCP

Now the question is: how the heck does the LLM know which weather API to call? There are thousands of weather APIs in the world, so how does the LLM know which one to call? That is the key to MCP.

MCP server

To make sure the LLM calls your weather API, you need to turn it into a tool and expose it to the LLM through an MCP server.
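In practice the LLM does not search the internet for an API. The MCP client asks each connected server for its tool list, and the LLM chooses only among those tools, using their names and descriptions. The dictionary below is a sketch of roughly what a server might return for a tools/list request; the field names name, description and inputSchema follow the MCP specification, while the description text and schema are illustrative values.

```python
import json

# Illustrative tools/list result. Field names follow the MCP spec;
# the description and schema values are examples only.
tools_list_result = {
    "tools": [
        {
            "name": "get_general_situation",
            "description": "Get the current general weather situation in Hong Kong "
                           "from the Hong Kong Observatory open data API.",
            "inputSchema": {"type": "object", "properties": {}},
        }
    ]
}

# The client hands these definitions to the LLM. The LLM can only pick from
# these names, which is how it "knows" which weather API to call.
available_tools = {tool["name"]: tool["description"] for tool in tools_list_result["tools"]}
print(json.dumps(available_tools, indent=2))
```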

So to use MCP, you first need to build an MCP server. For details, read the official documentation.

Here is a short example of my weather API server. I am using the Hong Kong Observatory open API:

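```python
import json

import requests
from mcp.server.fastmcp import FastMCP

# Name of this MCP server, as reported to MCP clients.
mcp = FastMCP("WeatherServer")


@mcp.tool()
async def get_general_situation() -> str:
    """Get the current general weather situation in Hong Kong from the Hong Kong Observatory."""
    # HKO open data API: dataType "flw" = local weather forecast, lang "tc" = Traditional Chinese.
    url = "https://data.weather.gov.hk/weatherAPI/opendata/weather.php"
    params = {
        "dataType": "flw",
        "lang": "tc",
    }
    # requests is blocking; fine for a demo, but an async HTTP client
    # would avoid blocking the server's event loop.
    response = requests.get(url, params=params, timeout=10)
    response.raise_for_status()
    data = response.json()
    # Fallback text means "unable to retrieve the general weather situation".
    general_situation = data.get("forecastDesc", "未能取得天氣概況")
    # ensure_ascii=False keeps the Chinese text readable instead of \uXXXX escapes.
    return json.dumps({"generalSituation": general_situation}, ensure_ascii=False)


if __name__ == "__main__":
    # Listen on all network interfaces, port 8080, so remote clients can connect.
    mcp.settings.host = "0.0.0.0"
    mcp.settings.port = 8080
    # Serve the MCP server over the SSE transport.
    mcp.run(transport="sse")
```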

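get_general_situation is my API endpoint. Adding the @mcp.tool() decorator makes the MCP server expose this function as a tool, which is passed to the LLM through the MCP client (I will talk about the client later). FastMCP uses the function's name and docstring as the tool's name and description, which is what the LLM reads when deciding which tool to call.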
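When the LLM decides to use the tool, the MCP client sends the server a JSON-RPC 2.0 tools/call request and receives the tool's return value wrapped as content. The messages below are a sketch of that exchange based on the MCP specification; the id value is arbitrary, and the "..." text is a placeholder for whatever the Hong Kong Observatory actually returns.

```python
import json

# Client -> server: ask the server to run the tool (it takes no arguments).
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_general_situation", "arguments": {}},
}

# Server -> client: the tool's return value (our JSON string) as text content.
# "..." is a placeholder, not real forecast data.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": json.dumps({"generalSituation": "..."})}],
        "isError": False,
    },
}

# The client passes this text to the LLM, which turns it into a human-friendly answer.
tool_text = response["result"]["content"][0]["text"]
print(json.dumps(request))
print(json.loads(tool_text)["generalSituation"])
```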

Transport

Now an important detail: mcp.run(transport="sse")

MCP currently has two official ways to connect to an MCP server:

- sse (Server-Sent Events)

- stdio (standard input/output)

For sse, I won't explain in detail what it is, but I will tell you that it is the standard way to connect to a remote MCP server. Many companies already provide MCP servers (just like they provided API servers before). You will see that those server links often end with /sse; when you see that, it is probably an MCP server.
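For the curious, here is roughly what travels over that /sse link. In MCP's HTTP+SSE transport, the server first sends an endpoint event telling the client where to POST its JSON-RPC messages, then sends its own messages back as message events. The parser below is a simplified sketch (it ignores the SSE id and retry fields), and the /messages/ path and session_id value in the sample stream are illustrative. For the server above, the link would presumably be http://<host>:8080/sse, assuming FastMCP's default SSE path.

```python
def parse_sse(stream_text: str) -> list:
    """Split a text/event-stream chunk into (event type, data) pairs (simplified)."""
    events = []
    event_type, data_lines = "message", []
    for line in stream_text.splitlines():
        if line == "":
            # A blank line ends the current event.
            if data_lines:
                events.append((event_type, "\n".join(data_lines)))
            event_type, data_lines = "message", []
            continue
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # SSE strips a single leading space after the colon
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
    return events


# Illustrative stream: endpoint announcement, then one JSON-RPC message.
sample = (
    "event: endpoint\n"
    "data: /messages/?session_id=abc123\n"
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}\n'
    "\n"
)
events = parse_sse(sample)
print(events)
```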

So sse is the better choice when the MCP server runs in another location or on another machine, but you can still use it locally. It's up to you.

stdio is for local use: fast and simple. For details, read the documentation.
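With stdio, the MCP client starts the server as a local subprocess (for the server above, you would switch to mcp.run(transport="stdio")), and the two sides exchange JSON-RPC messages over stdin and stdout, one message per line. The helper names below are hypothetical; this sketch only shows that newline framing, not a full client.

```python
import json


def encode_message(message: dict) -> str:
    """Serialize one JSON-RPC message as a single line for stdio."""
    # json.dumps without indent emits no raw newlines (newlines inside strings
    # become the two characters \n), so "\n" can safely mark the message end.
    return json.dumps(message) + "\n"


def decode_messages(stream: str) -> list:
    """Split stdio output back into JSON-RPC messages, one per non-empty line."""
    return [json.loads(line) for line in stream.splitlines() if line.strip()]


wire = (
    encode_message({"jsonrpc": "2.0", "id": 1, "method": "ping"})
    + encode_message({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
)
messages = decode_messages(wire)
print([m["method"] for m in messages])  # ['ping', 'tools/list']
```

No network or URL is involved, which is why stdio is the simple, fast option for local servers.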